Summary: Vast.ai is the best on-demand service for individual and academic use, provided that the user is comfortable working with cloud servers.

Google Colab provides a user-friendly web interface (essentially a Jupyter notebook) with a consistently available V100 card at around $0.5/hour.

Reserved servers have a high barrier to entry in both price and scale: the Lambda Labs contracts listed below, for example, require a minimum of 64 GPUs.

If feasible, purchasing the actual hardware proves to be more cost-effective in the long run. In such cases, the RTX 4090 is the optimal choice.
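The "cost-effective in the long run" claim can be checked with a simple break-even calculation. The sketch below is illustrative only: the hardware price, power draw, and electricity rate are hypothetical placeholders, not figures from this post, and the rental rate is the midpoint of the $0.4 - 0.6 hourly range listed for the 4090 on Vast.ai.

```python
def break_even_hours(hardware_cost_usd, rental_usd_per_hour,
                     power_kw=0.0, electricity_usd_per_kwh=0.0):
    """Hours of GPU use after which owning is cheaper than renting."""
    # Owning still costs electricity for every hour the card runs.
    running_cost_per_hour = power_kw * electricity_usd_per_kwh
    saving_per_hour = rental_usd_per_hour - running_cost_per_hour
    if saving_per_hour <= 0:
        raise ValueError("at these rates, renting never costs more than owning")
    return hardware_cost_usd / saving_per_hour


# Hypothetical inputs: a $2500 workstation, 0.45 kW draw, $0.15 per kWh.
hours = break_even_hours(2500.0, 0.5, power_kw=0.45, electricity_usd_per_kwh=0.15)
print(f"Break-even after about {hours:.0f} GPU-hours "
      f"({hours / 24:.0f} days of continuous use)")
```

The function ignores resale value, maintenance, and idle time, so the result is best read as a rough lower bound on how much use justifies a purchase.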

Cloud GPU Services

The table below lists hourly prices in USD for various cloud GPU services. Prices are per GPU unless a cell says otherwise ("for 8" is the price of an 8-GPU instance). Some on-demand services have limited availability, as indicated by the (*) symbol in the table.

| Service | H100 | A100 40GB | 4090 | A6000 | V100 |
|---|---|---|---|---|---|
| Vast.ai (on-demand, hourly) | | $0.8 - 1.0 | $0.4 - 0.6 | $0.4 - 0.6 | $0.2 - 0.3 |
| Vast.ai (reserved, monthly) | | $0.7 - 0.8 | $0.4 - 0.5 | $0.4 - 0.5 | $0.2 - 0.3 |
| Lambda Labs (on-demand, hourly) | $2.5 (*) | $1.3 (*) | | $0.8 (*) | |
| Lambda Labs (1 year contract) | $2.3 (min 64 GPUs) | | | | |
| Lambda Labs (3 year contract) | $1.9 (min 64 GPUs) | | | | |
| Google Colab (on-demand, hourly) | | $1.3 (*) | | | $0.5 |
| Google Cloud (on-demand, hourly) | | | | | $2.5 |
| Microsoft Azure (on-demand, hourly) | | $27.2 for 8 | | | $3.1 |
| Amazon AWS (on-demand, hourly) | $98.3 for 8 | $32.8 for 8 | | | $3.1 |

An asterisk (*) indicates that the service has very limited availability.
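Because some rows quote an 8-GPU instance while others quote a single card, it helps to normalize everything to a per-GPU hourly price before comparing. The helper below is a small sketch; the function name `per_gpu_price` is made up for illustration, and it only understands the two quote shapes that appear in the table ("$X" and "$X for N").

```python
import re


def per_gpu_price(quote):
    """Return the hourly USD price per GPU for quotes like '$98.3 for 8' or '$3.1'."""
    match = re.fullmatch(r"\$(\d+(?:\.\d+)?)(?: for (\d+))?", quote.strip())
    if match is None:
        raise ValueError(f"unrecognized quote: {quote!r}")
    total = float(match.group(1))
    gpus = int(match.group(2) or 1)  # a bare price is already per GPU
    return total / gpus


for quote in ["$98.3 for 8", "$32.8 for 8", "$27.2 for 8", "$3.1"]:
    print(f"{quote:>12} -> ${per_gpu_price(quote):.2f} per GPU-hour")
```

The three 8-GPU quotes work out to roughly $12.29, $4.10, and $3.40 per GPU-hour, which makes the gap to the single-GPU offerings easier to see.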

Comparison of Key Features

The user experience varies significantly between Lambda Labs, Vast.ai, and Google Colab:

| Feature | Lambda Labs | Vast.ai | Google Colab |
|---|---|---|---|
| Ease of Use | User-friendly | Less straightforward | Very user-friendly |
| Usability | Pre-configured for AI | SSH / Jupyter access | Intuitive web interface |
| Availability | Hard to get any GPU | Diverse GPU options | V100 and T4 only |
| Cost | Affordable | Very affordable | Affordable |
| Stability | High (cloud standard) | Relatively lower | High but has time limits |
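Since the GPU you actually receive differs by service, it is worth confirming the card and its memory right after connecting, whether over SSH or in a notebook cell. The following is a minimal sketch that shells out to `nvidia-smi`; it assumes the NVIDIA driver tools are installed on the instance, and the helper names `parse_gpu_list` and `list_gpus` are illustrative.

```python
import shutil
import subprocess


def parse_gpu_list(csv_text):
    """Parse 'name, memory' lines printed by nvidia-smi into (name, memory) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, memory = (field.strip() for field in line.split(",", 1))
        gpus.append((name, memory))
    return gpus


def list_gpus():
    """Return the visible NVIDIA GPUs, or an empty list if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True, text=True, check=True,
        )
    except subprocess.CalledProcessError:
        return []  # tool present but the driver could not be reached
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    print(list_gpus() or "no NVIDIA GPU detected")
```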

GPU Specifications

The performance of GPUs can differ based on specific workloads. For a general comparison in deep learning tasks, refer to the table below:

| GPU | FP16 (half) | FP32 (single) |
|---|---|---|
| H100 80GB PCIe | 3.3 | 5.3 |
| A100 40GB | 2.3 | 3.5 |
| RTX 4090 | 2.0 | 2.9 |
| RTX A6000 48GB | 1.5 | 2.1 |
| V100 16GB | 1 | 1 |

The numbers represent the speed of each card relative to a V100 16GB card (higher is faster). See the Deep Learning GPU Benchmarks from Lambda Labs.

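Surprisingly, the consumer-grade NVIDIA RTX 4090 performs nearly as well as an A100 40GB card, reaching roughly 87% of its FP16 speed and 83% of its FP32 speed in the table above. The high cost of the V100, A100, and H100 is due to their optimization for data-center use.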
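Relative speed and hourly price can be combined into a single figure: the cost of one hour of V100-equivalent work. The sketch below copies the relative speeds from the table above; the example prices are the roughly $0.5/hour Colab V100, the $2.5/hour on-demand H100 from Lambda Labs, and $0.5/hour as the midpoint of the $0.4 - 0.6 Vast.ai range for a 4090. The function names are illustrative.

```python
# Speed relative to a V100 16GB, copied from the benchmark table.
RELATIVE_SPEED = {
    "H100 80GB PCIe": {"fp16": 3.3, "fp32": 5.3},
    "A100 40GB": {"fp16": 2.3, "fp32": 3.5},
    "RTX 4090": {"fp16": 2.0, "fp32": 2.9},
    "RTX A6000 48GB": {"fp16": 1.5, "fp32": 2.1},
    "V100 16GB": {"fp16": 1.0, "fp32": 1.0},
}


def cost_per_v100_hour(gpu, usd_per_hour, precision="fp16"):
    """USD needed to do the work a V100 16GB completes in one hour."""
    return usd_per_hour / RELATIVE_SPEED[gpu][precision]


def speed_ratio(gpu_a, gpu_b, precision="fp16"):
    """How fast gpu_a is relative to gpu_b at the given precision."""
    return RELATIVE_SPEED[gpu_a][precision] / RELATIVE_SPEED[gpu_b][precision]


offers = [("V100 16GB", 0.5), ("H100 80GB PCIe", 2.5), ("RTX 4090", 0.5)]
for gpu, price in offers:
    print(f"{gpu:<15} ${cost_per_v100_hour(gpu, price):.2f} per V100-equivalent hour (FP16)")

print(f"4090 vs A100 40GB, FP16: {speed_ratio('RTX 4090', 'A100 40GB'):.0%}")
```

A lower number means more training per dollar. This treats the benchmark ratios as fixed, whereas real workloads will deviate from them, especially when a model does not fit in a smaller card's memory.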

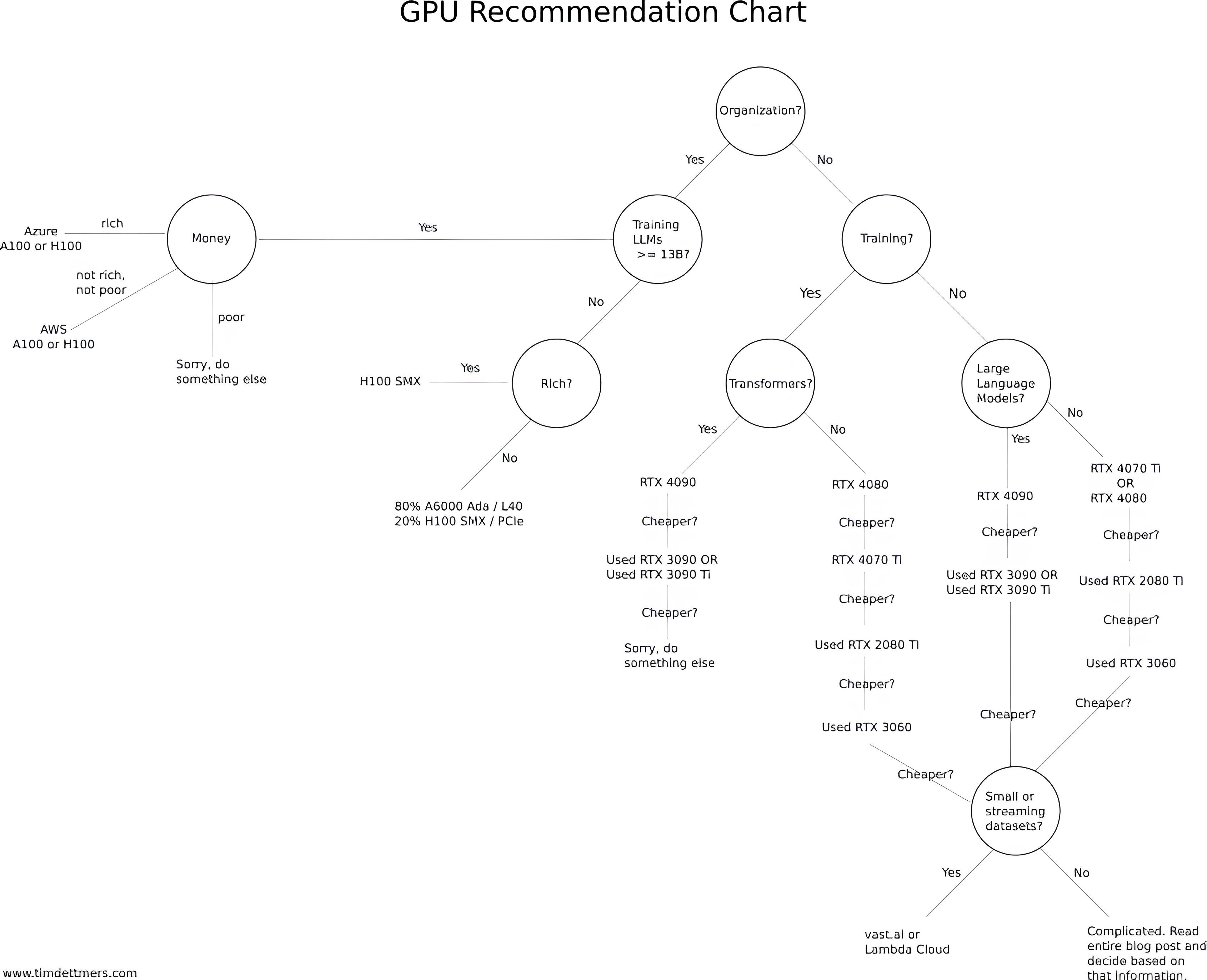

For a comprehensive discussion, refer to Tim Dettmers’ article: The Best GPUs for Deep Learning - An In-Depth Analysis.

Other Remarks

TPU: Google Colab provides TPU services at $2/hour.

Direct Machine Purchase: When possible, buying and assembling a machine can be an excellent choice.

High GPU Memory Device: For training tasks that require substantial GPU memory, the Mac Studio with the M2 Ultra chip is the most cost-effective choice, because its unified memory (configurable up to 192 GB) is shared between the CPU and GPU.